What Are Sitemaps and Robots.txt?

While design and content take center stage, sitemaps and robots.txt files work behind the scenes to guide search engines through your website.

These two elements are crucial in determining how search engines crawl, index, and display your website’s information. Though invisible to regular users, they form the backbone of how web crawlers interact with your site’s content. Let’s explore what each file does, how they work, and why they matter for every website.

What Is a Sitemap?

A sitemap is a specially formatted file, usually in XML, that supplies search engines with a structured list of the important pages on your website. Think of it as a blueprint or index that guides search engine spiders to the most important pages, media, and content on your site.

Instead of relying only on internal links, search engines use sitemaps to identify and prioritize what to visit and understand how your site is organized.
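
To make the format concrete, here is a minimal sketch that builds a small XML sitemap with Python's standard library. The `example.com` URLs, the dates, and the `build_sitemap` helper are illustrative assumptions, not taken from any real site; the `urlset`, `url`, `loc`, and `lastmod` elements and the namespace come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (loc, lastmod) pairs.

    `lastmod` may be None, in which case the element is omitted.
    """
    # Serialize the sitemap namespace as the default (prefix-free) namespace.
    ET.register_namespace("", SITEMAP_NS)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        if lastmod:
            ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, for illustration only.
pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/", None),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Each `url` entry needs only a `loc`; optional fields such as `lastmod` give crawlers a hint about when a page last changed.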

Types of Sitemaps

Image Sitemaps – Highlight important image content.

Video Sitemaps – Specify video files and relevant metadata.

News Sitemaps – Used by sites that publish news articles regularly.

What a Sitemap Does

Lists essential content on your website.

Helps crawlers discover pages that might not be easily accessible via regular navigation.

Supports large and dynamic websites with better page discovery.

What Is Robots.txt?

The robots.txt file is a simple, plain-text file that tells search engine crawlers which parts of your website they’re allowed, or not allowed, to visit. It’s like a digital instruction sheet for bots.

Basic Example

User-agent: *
Disallow: /private/
Allow: /blog/

User-agent: * – Applies to all crawlers.

Disallow: /private/ – Tells bots not to access any content in the “private” folder.

Allow: /blog/ – Grants permission to crawl the blog section.

Example: https://digitaltriumphs.com/robots.txt
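
The three directives described above can be combined into one file and checked with Python's built-in `urllib.robotparser`. This is a sketch under assumptions: the `example.com` URLs and the `ExampleBot` user agent are hypothetical, and the rules are parsed from a string rather than fetched from a live site.

```python
from urllib.robotparser import RobotFileParser

# The rules from the example, written out as a complete file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /blog/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical paths on a hypothetical site.
for path in ("/private/report.html", "/blog/first-post", "/about"):
    allowed = parser.can_fetch("ExampleBot", "https://example.com" + path)
    print(path, "allowed" if allowed else "blocked")
```

Paths that match no rule, such as `/about` here, are treated as allowed. Python's parser applies rules in file order, so results for overlapping rules can differ from crawlers that pick the most specific match.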

When Robots.txt Is Useful

Asks crawlers to stay out of sensitive areas like admin pages. It is a request rather than a lock, so confidential content still needs real access control.

Avoids wasting crawler time on low-value pages.

Keeps duplicate content from being crawled multiple times.

Keeps staging or test versions of pages out of crawlers’ paths.

What Is the Difference Between a Sitemap and Robots.txt?

| Feature | Purpose | Format | Function | Visibility |
|---|---|---|---|---|
| Sitemap | Lists pages and content to visit | XML | Helps with discovery | Actively submitted or referenced |
| Robots.txt | Tells bots where not to go | Plain text | Controls crawling | Automatically found |

Why These Files Matter

Even though they work in the background, both files shape how web crawlers interact with your site. When used correctly, they:

Ensure the most important pages are visited.

Keep crawlers out of unwanted or sensitive areas.

Improve how information is processed.

Give better control over what’s accessible online.

They’re especially helpful for large websites, blogs with hundreds of posts, or e-commerce platforms with thousands of product pages.

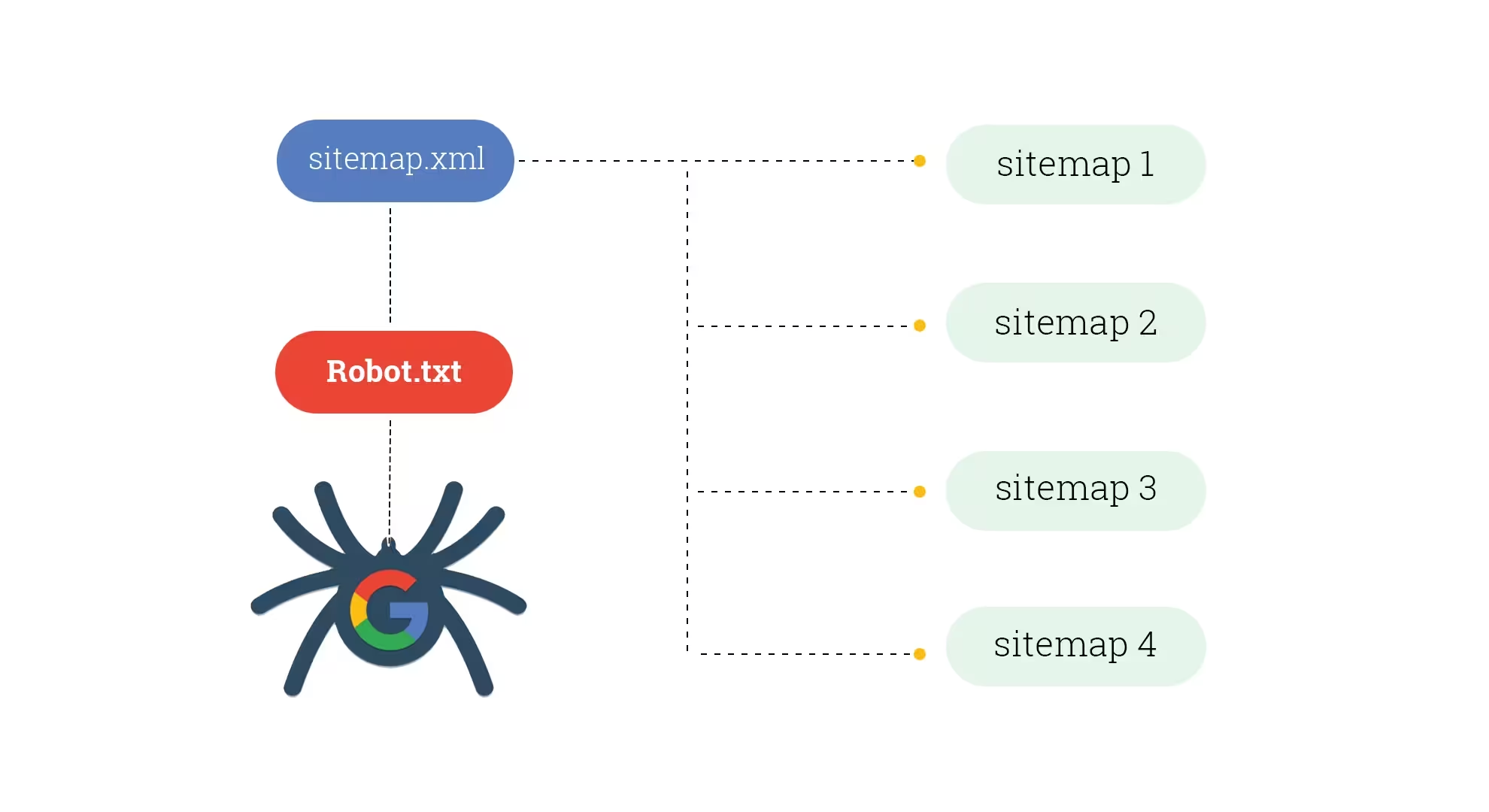

How They Work Together

The sitemap points to the content that matters most.

Robots.txt blocks irrelevant or sensitive content.

Together, they create a focused, efficient crawling path.

You can also reference your sitemap in the robots.txt file by adding a Sitemap: line that gives the sitemap’s full URL.

This ensures that any crawler reading your robots.txt also gets quick access to your sitemap.
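
As a sketch of what that looks like, the robots.txt below carries a `Sitemap:` line, and Python's `urllib.robotparser` reads it back the way a crawler might. The `example.com` address is a placeholder, and `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# A robots.txt that also advertises the sitemap location (placeholder URL).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the listed sitemap URLs, or None if there are none.
sitemaps = parser.site_maps()
print(sitemaps)
```

The `Sitemap:` line is independent of any `User-agent` group, so it can sit anywhere in the file, and a file may list more than one sitemap.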

Best Practices

Here are some key tips to make the most out of both files:

Keep them in the root directory so bots can find them easily.

Don’t block pages you want crawled, especially if they’re in your sitemap.

Use clear and simple formatting to avoid confusion.

Update the sitemap regularly as you add or remove content.

Test both files using online tools and webmaster platforms to ensure accuracy.
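
One of these tips, not blocking pages that appear in your sitemap, is easy to check automatically. The sketch below is one possible approach using only Python's standard library; the `blocked_sitemap_urls` helper, the sample rules, and the `example.com` URLs are all illustrative assumptions.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def blocked_sitemap_urls(robots_txt, sitemap_xml, user_agent="*"):
    """Return sitemap URLs that the robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    root = ET.fromstring(sitemap_xml)
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", NS) if el.text]
    return [url for url in locs if not parser.can_fetch(user_agent, url)]


# Hypothetical inputs that contain one deliberate conflict.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

SITEMAP_XML = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/blog/first-post</loc></url>
  <url><loc>https://example.com/private/draft</loc></url>
</urlset>
"""

conflicts = blocked_sitemap_urls(ROBOTS_TXT, SITEMAP_XML)
print(conflicts)
```

Any URL this reports is being advertised in the sitemap and blocked in robots.txt at the same time, so one of the two files should change.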

Conclusion

Sitemap and robots.txt files are like the behind-the-scenes staff of a well-run website: they don’t appear on the homepage, but they keep everything running smoothly. The sitemap offers direction, while the robots.txt file sets the crawling rules.

By using both, you help ensure that your content is discoverable, that crawlers stay out of the sections you mark off-limits, and that everything runs in a structured, organized way. Whether you run a blog, online store, or portfolio, understanding and maintaining these two files is essential for a well-run website.